Profiling is a technique used to identify code-level performance bottlenecks in an application and tune the application code accordingly. In the context of Web-based and multi-tiered applications, profiling needs to be done in the Application Server/Middleware tier, as most of the business logic processing is centered there. This paper briefly describes the components and process of profiling, shares some practical challenges of integrating profiling tools with Java applications specifically, and suggests some options to overcome these issues so that profiling and tuning activities can be carried out effectively.

Introduction

The term “Profiling” refers to understanding and analyzing an application's programs in terms of the time spent in various methods, calls and/or sub-routines and the memory usage of the code. This technique is used to find the expensive methods/calls/subroutines of a given application program and tweak them for performance optimization and tuning. The amount of time it takes the code to run is also referred to as “Latency”; in typical multi-tiered applications, on the server side, it is called “Server-Side Latency”.

Profiling can also be used to get insights into the application's memory usage, such as how objects/variables are created in the memory provided by the runtime (JVM Heap, .NET CLR, Native Memory, or others), which can be further used to optimize the memory footprint of the application. Also, for multithreaded applications, profiling can uncover issues related to Thread Synchronization and show the thread status as well.
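As a rough illustration of the kind of thread information a profiler surfaces, the following sketch uses the JDK's standard `java.lang.management` API to count the threads of the current JVM by state and to look for monitor deadlocks. The class and method names (`ThreadStateSnapshot`, `countByState`, `deadlockedThreadCount`) are made up for this example; a real profiler collects far more detail.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.EnumMap;
import java.util.Map;

// Hypothetical helper, for illustration only.
class ThreadStateSnapshot {

    /** Counts the live threads of the current JVM by state (RUNNABLE, BLOCKED, WAITING, ...). */
    static Map<Thread.State, Integer> countByState() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        Map<Thread.State, Integer> counts = new EnumMap<>(Thread.State.class);
        for (ThreadInfo info : threads.getThreadInfo(threads.getAllThreadIds())) {
            if (info != null) { // a thread may have exited between the two calls
                counts.merge(info.getThreadState(), 1, Integer::sum);
            }
        }
        return counts;
    }

    /** Returns the number of threads that are deadlocked waiting for object monitors. */
    static int deadlockedThreadCount() {
        long[] ids = ManagementFactory.getThreadMXBean().findMonitorDeadlockedThreads();
        return ids == null ? 0 : ids.length;
    }

    public static void main(String[] args) {
        System.out.println("Threads by state: " + countByState());
        System.out.println("Deadlocked threads: " + deadlockedThreadCount());
    }
}
```

A large number of BLOCKED threads in such a snapshot is usually a hint to look at lock contention in more detail with a profiler.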

The objective of this article is to enumerate some of the most common practical challenges in integrating Profiling Tools with Java/J2EE Applications (standalone or Application Server-based) and discuss workarounds that can help significantly reduce the turnaround time required for the profiling integration activity in the Application Performance Management process.

For instance, in a 3-tiered Web application comprising a Web Server, an Application Server and a Database Server, consider an online business use case or business transaction that takes a long time to respond to an end user accessing it through a browser. In this context, the high end-to-end response time of the transaction could be attributed to Client-Side Rendering, Web Server Request/Response handling, Business Logic Processing on the Application Server, or Query Execution on the Database Server. To find out where the latency is high, profiling can be used to uncover where the server-side time is being spent.

Types of profiling

Profiling can be broadly categorized into 2 types: CPU Profiling and Memory Profiling.

CPU Profiling

CPU Profiling mainly focuses on identifying the high-latency methods of the application code. In the Java context, it provides the call graph for a given business process with the break-up of time spent in each of the methods. The method latency can be measured in 2 ways: Elapsed Time and CPU Time.

Elapsed Time is the total time taken by a method. This includes the time taken within the method, its sub-methods and any time spent in Network I/O or Disk I/O. Put simply, the elapsed time is the duration between the entry and the exit of a method as measured by a wall clock. For instance, if a method involves the execution of business logic in Java code and SQL calls to a database (which is Network I/O), elapsed time shows the total time for all of that method to run.

Elapsed Time = CPU Time + Disk I/O + Network I/O

CPU Time refers to the time a method/function/routine spends exclusively on the CPU executing its logic. Hence, it does not include the time taken for any I/O or other delays from the Network or Disk. For the same example mentioned above, CPU time does not include the time spent in database execution because that is Network I/O.

CPU Time = Time exclusively spent on CPU (without I/O or any Interrupt Delays)

In general, it is the Elapsed Time which will be of interest; however, both measures provide valuable information about the application processing.

If the CPU time is very high for a given method/routine/function, it indicates that the method/routine/function is processing-intensive and little I/O is involved.

Alternately, if the Elapsed Time is very high and the CPU Time is low for a given method/routine/function, it indicates that the method/routine/function has significant I/O activity.

In extreme cases, the CPU time and the wall clock time can differ by a very large factor, especially if the executing thread has a low priority, since the OS can then preempt the method execution multiple times.
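The difference between the two measures can be seen with a small sketch that times the same piece of work both ways, using `System.nanoTime()` for elapsed time and the JDK's `ThreadMXBean.getCurrentThreadCpuTime()` for CPU time. The class name `ElapsedVsCpuTime` and the use of `Thread.sleep` as a stand-in for I/O wait are assumptions made for this illustration; it also assumes a JVM on which thread CPU time measurement is supported and enabled, which is the usual default on HotSpot.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;

// Hypothetical helper, for illustration only.
class ElapsedVsCpuTime {

    /** Runs the task on the calling thread and returns {elapsedNanos, cpuNanos}. */
    static long[] measure(Runnable task) {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        long wallStart = System.nanoTime();
        long cpuStart = threads.getCurrentThreadCpuTime();
        task.run();
        long cpuNanos = threads.getCurrentThreadCpuTime() - cpuStart;
        long elapsedNanos = System.nanoTime() - wallStart;
        return new long[] {elapsedNanos, cpuNanos};
    }

    public static void main(String[] args) {
        // Thread.sleep stands in for time spent waiting on Network or Disk I/O.
        long[] waiting = measure(() -> {
            try {
                Thread.sleep(200);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        System.out.printf("waiting task: elapsed=%d ms, cpu=%d ms%n",
                waiting[0] / 1_000_000, waiting[1] / 1_000_000);

        // A pure computation: elapsed time and CPU time should be close to each other.
        long[] computing = measure(() -> {
            long sum = 0;
            for (int i = 0; i < 50_000_000; i++) {
                sum += i % 7;
            }
            if (sum < 0) System.out.println(sum); // keeps the loop from being optimized away
        });
        System.out.printf("computing task: elapsed=%d ms, cpu=%d ms%n",
                computing[0] / 1_000_000, computing[1] / 1_000_000);
    }
}
```

For the waiting task the elapsed time should be far larger than the CPU time, which is the I/O-bound pattern described above.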

Memory profiling

Memory profiling refers to analyzing how objects are created in the memory provided by the runtime (such as the JVM for Java/J2EE applications and the .NET CLR for .NET applications) in order to optimize the memory footprint of the application. Memory profiling can also be used to find critical issues such as Memory Leaks.
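A very small part of what a memory profiler reports, the amount of JVM heap in use, can be read with the JDK's `MemoryMXBean`. The sketch below is only meant to make the idea concrete; the class name `HeapUsageSample` is invented for this example, and the reported difference is approximate because garbage collection can run at any time.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

// Hypothetical helper, for illustration only.
class HeapUsageSample {

    /** Returns the number of heap bytes currently in use, as reported by the JVM. */
    static long usedHeapBytes() {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        return heap.getUsed();
    }

    public static void main(String[] args) {
        long before = usedHeapBytes();

        // Allocate and retain roughly 16 MB so the change is visible.
        byte[][] retained = new byte[16][];
        for (int i = 0; i < retained.length; i++) {
            retained[i] = new byte[1024 * 1024];
        }

        long after = usedHeapBytes();
        System.out.println("Retained arrays: " + retained.length);
        System.out.println("Approximate heap growth in KB: " + (after - before) / 1024);
    }
}
```

A heap figure that keeps growing across repeated runs of the same business scenario is the typical symptom that prompts a closer look for a memory leak.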

Generic architecture of profilers

This section provides a generic architecture of a Profiling Tool. Typically, any profiling tool will have 2 major components: an Agent and a Console.

An Agent, sometimes also called a Probe or Profiler, is a component that runs on the server (typically an Application Server) where the code is deployed and running. The agent is attached to the JVM, collects performance metrics using the JVMPI/JVMTI interfaces, and pushes the data to a pre-configured port on the host machine where the application is running.

A Console is a Java program which typically captures data from the pre-configured port and displays the metrics in a dashboard view. It is used to view the metrics and also to capture Snapshots that can be used for offline analysis. The diagram below illustrates the profiler's architecture in general.

Figure 1. Generic Architecture & Components of Java Profilers

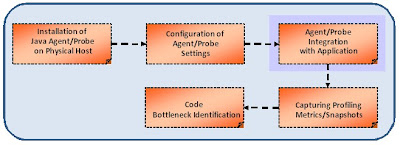

Process steps in profiling

In the context of Java Profilers used with Java Application Servers or standalone Java programs, the Java Agent needs to be attached to the JVM so that it can profile the application. This process is referred to as “Agent Integration” or “Profiler Integration”. It is done by adding specific arguments to the JVM, either in the Application Server's startup scripts or on the command line when invoking the standalone Java program.

Figure. 2. Process Steps in Profiling Activity

The sections below

are focused towards Profiling Applications running in Java based Application

Servers.

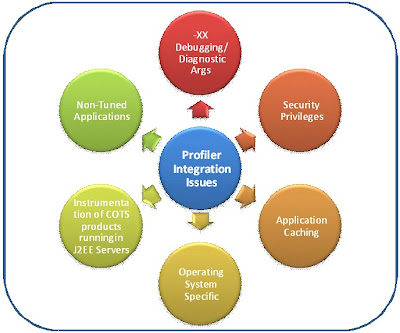

Practical challenges in profiler integration & remediation

In this section, I present the practical challenges of integrating Java Profiling Agents with Java/J2EE Applications and Java Application Servers and suggest ways to overcome them so that the profiling activity can be carried out effectively. These issues are broadly divided into several categories, as illustrated in Figure 3 below. The categories and their remedies are discussed in detail in the following sections.

Figure 3. Profiling Integration Issues

-XX debugging/diagnostic Java parameters

Issue

Integration of Java profilers with Java-based Application Servers might not be stable or successful if the JVM arguments contain -XX arguments, especially some of the “Debugging” or “Diagnostic” flags. It should be understood that JVM options specified with -XX are not stable and are not recommended for casual use. Also, these options are subject to change without notice.

Hence, an attempt is made here to list some specific -XX flags to look out for while integrating Profilers with Java Application Servers if any unexpected behavior is observed with Profiler Agents.

Recommended Solution

| JVM -XX Flag | Description | Impact | Suggestion |
| --- | --- | --- | --- |
| -XX:+AggressiveOpts | This flag turns on “point performance optimizations” that are expected to become the default in later Sun JDK releases (the flag is available in JDK 1.5 and above). It lets you try the JVM's latest performance tweaks; however, the option is experimental, and the specific optimizations it enables can change from release to release, so it should be evaluated before deploying the application to Production. (NOTE: this should be used with -XX:+UnlockDiagnosticVMOptions and -XX:-EliminateZeroing.) | The JVM might not start up with a Java Agent/Probe, and/or the application might crash. | Disable these options, or remove these switches from the JVM arguments list, in order to enable the profiling activity. |
| -XX:-EliminateZeroing | This option disables the initialization of newly created character array objects. It is typically used along with -XX:+UnlockDiagnosticVMOptions if -XX:+AggressiveOpts is to be used. | The JVM might not start up with a Java Agent/Probe, and/or the application might crash. | As above. |
| -XX:+UnlockDiagnosticVMOptions | Any “diagnostic” JVM flag must be preceded by this flag. | The JVM might not start up with a Java Agent/Probe, and/or the application might crash. | As above. |
| -XX:+ExtendedDTraceProbes | Enables performance-impacting “dtrace” probes, which can be used to monitor the JVM's internal state and activities as well as the running Java application. (Introduced in JDK 1.6; relevant to Solaris 10 and above only.) | If this option is already enabled, adding another Profiling Agent makes the JVM's behavior unpredictable. | As above. |
| -Xrunhprof[:options] | HPROF is a JVM native agent library that is dynamically loaded at JVM startup when this switch is passed in the JVM arguments, and it becomes part of the JVM process. By supplying HPROF options at startup, users can request various types of heap and/or CPU profiling features from HPROF. | If this option is already enabled, adding another Profiling Agent makes the JVM's behavior unpredictable. | As above. |
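Before attaching an agent, it can help to check whether the target JVM was started with any of the switches listed in the table. The following is a minimal sketch, assuming the check runs inside the JVM in question; it reads the startup arguments through the standard `RuntimeMXBean.getInputArguments()` call. The class name `JvmFlagCheck` and the prefix list are illustrative: the list uses the enabling (+) form of ExtendedDTraceProbes and also includes `-agentlib:hprof`, the newer spelling of `-Xrunhprof`, which the table does not mention.

```java
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, for illustration only.
class JvmFlagCheck {

    // Switches to look out for; extend this list to suit the profiler in use.
    static final List<String> RISKY_PREFIXES = Arrays.asList(
            "-XX:+AggressiveOpts",
            "-XX:-EliminateZeroing",
            "-XX:+UnlockDiagnosticVMOptions",
            "-XX:+ExtendedDTraceProbes",
            "-Xrunhprof",
            "-agentlib:hprof");

    /** Returns the arguments that start with one of the listed switches. */
    static List<String> findRisky(List<String> jvmArgs) {
        List<String> hits = new ArrayList<>();
        for (String arg : jvmArgs) {
            for (String prefix : RISKY_PREFIXES) {
                if (arg.startsWith(prefix)) {
                    hits.add(arg);
                    break;
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // Arguments the current JVM was started with (excluding the main class arguments).
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        List<String> hits = findRisky(jvmArgs);
        if (hits.isEmpty()) {
            System.out.println("None of the listed switches are present.");
        } else {
            System.out.println("Consider removing before attaching a profiler: " + hits);
        }
    }
}
```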

Non-tuned application

Issue

In certain scenarios, where the Application is not performance-tuned or the business scenarios have high response times, attaching a profiler to the Application (Application Server) will cause time-outs, and sometimes the Application Server cannot even start up. This is attributed to the additional overhead that profiling tools create through byte-code instrumentation; the overhead increases linearly with the methods' execution time, making the overall business scenario execution time even higher and resulting in time-outs.

Recommended Solution

The recommendation for such scenarios is to identify the high-latency code components by introducing Java code in the application to capture the time taken in the crucial methods. However, this approach has the disadvantage that the critical classes and methods involved in a business transaction must be chosen and instrumented with logging statements by hand, which requires a code change and a redeployment.

The following code snippet illustrates this concept:

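    import java.util.logging.Logger;

    class A {
        // java.util.logging stands in here for the log utility the application already uses
        private static final Logger LOG = Logger.getLogger(A.class.getName());

        public Object obj1;
        public Object obj2;

        public void method1() {
            long startTime = System.currentTimeMillis(); // wall-clock time at method entry

            // ... business logic of the method ...

            long endTime = System.currentTimeMillis();   // wall-clock time at method exit
            LOG.info("Time spent in method " + getClass().getName() + ".method1 in msec: "
                    + (endTime - startTime));
        }
    }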
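To avoid repeating the start/end bookkeeping in every method, the manual timing approach can be wrapped in a small helper. The sketch below is one possible shape, not part of any profiler: `MethodTimer`, `timed` and the label `OrderService.computeTotal` are invented names, and `java.util.logging` stands in for whatever log utility the application uses. It uses `System.nanoTime()` because that clock is not affected by system time adjustments, and a `finally` block so the time is logged even when the method throws.

```java
import java.util.function.Supplier;
import java.util.logging.Logger;

// Hypothetical helper, for illustration only.
class MethodTimer {

    private static final Logger LOG = Logger.getLogger(MethodTimer.class.getName());

    /** Runs the body, logs its elapsed time under the given label, and returns its result. */
    static <T> T timed(String label, Supplier<T> body) {
        long start = System.nanoTime();
        try {
            return body.get();
        } finally {
            long elapsedMs = (System.nanoTime() - start) / 1_000_000;
            LOG.info("Time spent in " + label + " in msec: " + elapsedMs);
        }
    }

    public static void main(String[] args) {
        // The label and the computation are placeholders for a real business method.
        long total = timed("OrderService.computeTotal", () -> {
            long sum = 0;
            for (int i = 1; i <= 1_000_000; i++) {
                sum += i;
            }
            return sum;
        });
        System.out.println("Result: " + total);
    }
}
```

The code change and redeployment cost mentioned above still applies; the helper only keeps the instrumentation in one place so it is easy to remove once the bottleneck is found.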

Security privileges

This section highlights the typical security or access privilege issues encountered while integrating Java profiler agents with Java Applications. The table below lists the issues and recommendations to overcome them.

| Issue | Issue Description | Suggestion |
| --- | --- | --- |
| Owner permissions for Agent Installation Folder | If the Agent/Probe installation folder is not owned by the user that starts the Java process, the Probe/Agent libraries cannot be loaded by the Java process due to insufficient security privileges. | The agent installation folder on the file system should, recursively, have the same owner as the Java process. For instance, if the Java process is started by the “wasadmin” user, the agent installation folder and all its sub-directories should have “wasadmin” as the owner. (NOTE: On UNIX-based platforms, the Primary UserId and Primary Group should be the same as those of the Java process.) E.g., on UNIX-based platforms: chown -R <uid>:<Primary Group> <Agent Installation Folder> |
| Execute permissions for Agent Installation Folder | If the Agent folder has only Read and Write permissions, the Agent/Probe libraries cannot be executed within the Java process. | Assign proper access permissions to the Probe installation folder recursively. E.g.: chmod -R 775 <Probe Installation Directory> (UNIX/Linux/Solaris/AIX/HP-UX platforms); Read/Write/Execute permissions (Windows platform). |
| Java Applications/Application Servers enabled with Java Security | For Java Applications that are enabled with Java Security, profilers might not start due to missing directives in the server.policy file. | Grant all security permissions to the Agent/Probe's jar file by adding directives to the server.policy file. E.g.: when integrating the HP Diagnostics Java Probe with WAS 6.1, the profiler could not start and collect all the required metrics until the following was added to the server.policy file: grant codeBase "file:/opt/MercuryDiagnostics/JavaAgent/DiagnosticsAgent/lib/../lib/probe.jar" { permission java.security.AllPermission; }; (NOTE: For Java Security-enabled standalone applications, identify the property file in which the required security directive needs to be added.) |
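The file-system checks from the table can be automated as a quick preflight before starting the server. The sketch below is a simplification under stated assumptions: it must be run as the same user that starts the Java process, it only verifies that every entry under the agent folder is readable and every directory can be traversed, and it merely prints the folder owner for a manual comparison. The class name `AgentFolderCheck` and the way the folder is passed in are invented for this example.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

// Hypothetical helper, for illustration only.
class AgentFolderCheck {

    /** Walks the agent folder and lists entries the current process cannot read or traverse. */
    static List<String> findProblems(Path agentDir) throws IOException {
        List<String> problems = new ArrayList<>();
        try (Stream<Path> paths = Files.walk(agentDir)) {
            paths.forEach(p -> {
                if (!Files.isReadable(p)) {
                    problems.add("not readable: " + p);
                }
                if (Files.isDirectory(p) && !Files.isExecutable(p)) {
                    problems.add("directory not traversable: " + p);
                }
            });
        }
        return problems;
    }

    public static void main(String[] args) throws IOException {
        // Pass the agent installation folder as the first argument.
        Path agentDir = Paths.get(args.length > 0 ? args[0] : ".");
        System.out.println("Running as user: " + System.getProperty("user.name"));
        System.out.println("Folder owner:    " + Files.getOwner(agentDir).getName());

        List<String> problems = findProblems(agentDir);
        if (problems.isEmpty()) {
            System.out.println("No read/traverse problems found under " + agentDir);
        } else {
            problems.forEach(System.out::println);
        }
    }
}
```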

Application Caching

Issue

For certain applications where Caching is used (either Custom Caching or Distributed Cache Products) to improve the application's performance, profilers might not work as expected. This is attributed to the increased overhead of tracking and probing each and every object available in the Cache.

Some Profilers/Probes have default instrumentation points for different layers such as JSP/Servlet, EJB and DAO, for standard Java frameworks such as Struts and Spring, and for ORM tools such as Hibernate. Therefore, even without any custom instrumentation points, the overhead will be very high if the Application under consideration uses huge object Caches.

Recommended Solution

In such scenarios, it is suggested to follow one of the options highlighted below:

Disable all the default instrumentation points that come with the Probe/Profiler and observe the application behavior with the Profiler attached.

Disable Caching only while profiling the application, in order to find any bottlenecks in the Java code and in other layers such as the Database or external systems.

Alternately, reduce the Cache Size so that the impact of object tracking and probing is reduced (this works in most cases, since it is desirable to design any Cache so that its Size and Cache Invalidation Policies are configurable).
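As an illustration of a cache whose size is configurable, the sketch below builds a small least-recently-used cache on the JDK's `LinkedHashMap`, so the maximum number of entries can be lowered for a profiling run without touching the code. It is a minimal, single-threaded example rather than a replacement for a cache product; the class name `BoundedLruCache` and the system property `cache.maxEntries` are assumptions made for this example.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical cache, for illustration only; not thread-safe.
class BoundedLruCache<K, V> extends LinkedHashMap<K, V> {

    private static final long serialVersionUID = 1L;

    private final int maxEntries;

    BoundedLruCache(int maxEntries) {
        super(16, 0.75f, true); // true = iterate in access order, needed for LRU eviction
        this.maxEntries = maxEntries;
    }

    /** Called by LinkedHashMap after each insertion; evicts the least recently used entry. */
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries;
    }

    public static void main(String[] args) {
        // Size comes from a (hypothetical) system property, e.g. -Dcache.maxEntries=100
        int maxEntries = Integer.getInteger("cache.maxEntries", 1000);
        BoundedLruCache<String, String> cache = new BoundedLruCache<>(maxEntries);
        cache.put("customer:1", "Alice");
        cache.put("customer:2", "Bob");
        System.out.println("Entries: " + cache.size() + ", limit: " + maxEntries);
    }
}
```

With a design like this, reducing the cache for a profiling session is a startup setting rather than a code change.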

Default instrumentation of COTS products running in J2EE Servers

Issue

If the application that needs to be profiled includes COTS products that run in the JVM process, the JVM might not start up when invoked with a profiling agent.

I would like to share my experience with an industry-leading Rules Engine product hosted in a WebSphere Application Server instance, which could not be started successfully after integrating a Java profiler. With the profiler attached, the application server crashed, showing an issue in the Java CompilerThread in libjvm.so, which is a JVM library. There was no sign of any error coming from the Profiler's libraries. Without the profiler, no JVM crash was observed at any time.

Recommended Solution

Ensure that the COTS product running in the Java process does not have any probing or monitoring mechanism enabled by default. If one is enabled, try disabling it and running with the profiler.

Check whether the COTS product is compatible with the profiler in use. Often, it is quite difficult to find documentation that confirms the product's compatibility with the Profiler. Hence, it is suggested to reach out to the tool vendor or the COTS vendor to get the required support.

Operating system specific

Issue

In certain cases, the number of file descriptors configured on UNIX-based Operating Systems (UNIX/Solaris/Linux/HP-UX) will have an impact on the profiling process. Since the profiling activity loads additional libraries and binaries, application servers running with a Probe/Profiler might not start up properly if too few file descriptors are available.

Recommended Solution

It is recommended to check the current file descriptor limit (using ulimit -n) and increase the number of file descriptors allowed on the system. It should be noted that this change is not mandatory whenever a profiler is used with an application; however, it serves as a checklist item in case of unexpected errors while working with profiler-attached applications.
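The same check can also be made from inside the JVM, which shows how close the process actually is to its limit. The following sketch assumes a HotSpot-style JVM on a UNIX-like system, where the operating system bean implements `com.sun.management.UnixOperatingSystemMXBean`; on other platforms the helper simply returns null. The class name `FileDescriptorCheck` is invented for this example.

```java
import com.sun.management.UnixOperatingSystemMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;

// Hypothetical helper, for illustration only.
class FileDescriptorCheck {

    /** Returns {open, max} file descriptor counts for this JVM, or null if unavailable. */
    static long[] openAndMax() {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof UnixOperatingSystemMXBean) {
            UnixOperatingSystemMXBean unix = (UnixOperatingSystemMXBean) os;
            return new long[] {
                unix.getOpenFileDescriptorCount(),
                unix.getMaxFileDescriptorCount()
            };
        }
        return null; // not a UNIX-like platform, or the bean is not supported by this JVM
    }

    public static void main(String[] args) {
        long[] counts = openAndMax();
        if (counts == null) {
            System.out.println("File descriptor counts are not available on this platform.");
        } else {
            System.out.println("Open file descriptors: " + counts[0] + " of " + counts[1]);
        }
    }
}
```

Comparing the open count with and without the agent attached gives a rough idea of how much headroom the profiler needs.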
